In adversarial attacks intended to confound deep learning models, most studies have focused on limiting the magnitude of the modification so humans do not notice the attack. On the other hand, during an attack against autonomous cars, for example, most drivers would not find it strange if a small insect image were placed on a stop sign, or they may overlook it. In this paper, we present a systematic approach to generate natural adversarial examples against classification models by employing such natural-appearing perturbations that imitate a certain object or signal. We first show the feasibility of this approach in an attack against an image classifier by employing generative adversarial networks that produce image patches that have the appearance of a natural object to fool the target model. We also introduce an algorithm to optimize placement of the perturbation in accordance with the input image, which makes the generation of adversarial examples fast and likely to succeed. Moreover, we experimentally show that the proposed approach can be extended to the audio domain, for example, to generate perturbations that sound like the chirping of birds to fool a speech classifier.
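One way to read the image attack described above is as a generator trained on two goals at once: its patches should look natural to a discriminator, and the patched image should be classified as the attacker's target label. The sketch below is only an illustration under that assumption, not the authors' implementation. The names `paste_patch` and `generator_loss`, the weight `alpha`, and the use of plain nested lists as images are all hypothetical.

```python
import math


def paste_patch(image, patch, top, left):
    """Return a copy of `image` with `patch` pasted at row `top`, column `left`.

    Both arguments are lists of rows of pixel values. The patch must fit
    inside the image; the input image is left unmodified.
    """
    if top < 0 or left < 0:
        raise ValueError("placement must be non-negative")
    if top + len(patch) > len(image) or left + len(patch[0]) > len(image[0]):
        raise ValueError("patch does not fit at this placement")
    out = [row[:] for row in image]
    for i, patch_row in enumerate(patch):
        for j, value in enumerate(patch_row):
            out[top + i][left + j] = value
    return out


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def generator_loss(realism_score, target_logits, target_label, alpha=1.0):
    """Hypothetical combined objective for the patch generator.

    realism_score: discriminator's probability, in (0, 1], that the patch
        is a real image of the imitated object (for example, a moth).
    target_logits: target classifier's logits for the patched image.
    target_label: index of the class the attacker wants predicted.
    alpha: assumed weight balancing realism against attack success.
    """
    gan_term = -math.log(realism_score)
    attack_term = -math.log(softmax(target_logits)[target_label])
    return gan_term + alpha * attack_term
```

Here `top` and `left` stand in for the placement parameters that the approach optimizes per input image; how they are searched is left out of this sketch.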

We first generated adversarial examples with perturbations that imitate images of moths, which made a pretrained road sign classifier recognize the perturbed images as "Speed Limit 80."

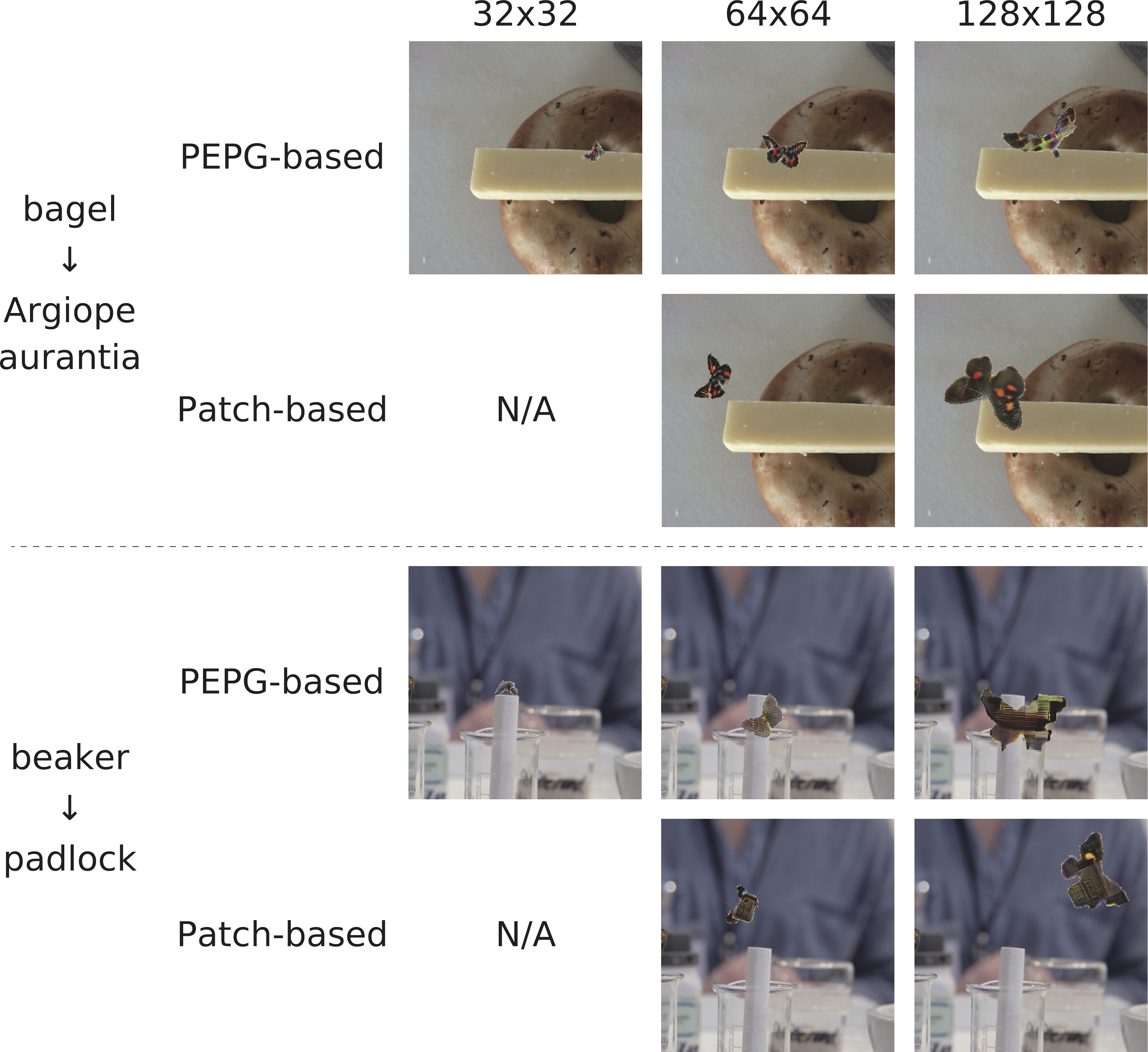

We also generated adversarial examples against a pretrained ImageNet classifier.

In the audio domain, we generated adversarial examples with a perturbation that imitates the chirping of birds, which made a pretrained speech command classifier recognize the perturbed commands as "STOP."
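For the audio case, the final adversarial example can be thought of as the original command with the chirp-like perturbation mixed in at some position. The sketch below shows only that mixing step, assuming waveforms stored as lists of floats in [-1, 1]. The function name `mix_perturbation` and the `offset` and `gain` parameters are hypothetical and do not come from the paper.

```python
def mix_perturbation(speech, perturbation, offset=0, gain=1.0):
    """Add `gain * perturbation` to `speech`, starting at sample `offset`.

    Samples of the perturbation that fall outside the speech clip are
    dropped, and the result is clipped to the valid range [-1, 1].
    The input lists are left unmodified.
    """
    mixed = list(speech)
    for i, sample in enumerate(perturbation):
        pos = offset + i
        if 0 <= pos < len(mixed):
            mixed[pos] = max(-1.0, min(1.0, mixed[pos] + gain * sample))
    return mixed
```

In this simplified view, `offset` plays the role that patch placement plays for images: it decides where in the input the natural-sounding perturbation appears.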

| Original input (from Speech Command dataset) | Adversarial example | Perturbation |
|---|---|---|
| NO | | |
| YES | | |

Hiromu Yakura, Youhei Akimoto, and Jun Sakuma: Generate (non-software) Bugs to Fool Classifiers. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, 2020. [Paper] [arXiv:1911.08644]

This study was supported by KAKENHI 19H04164 and 18H04099.