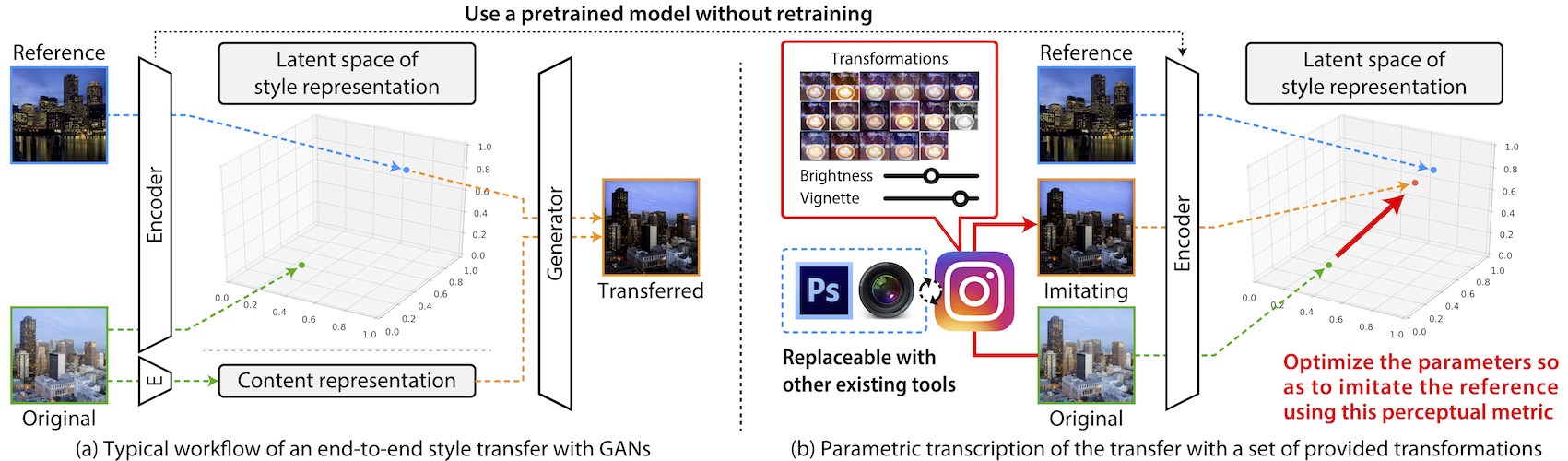

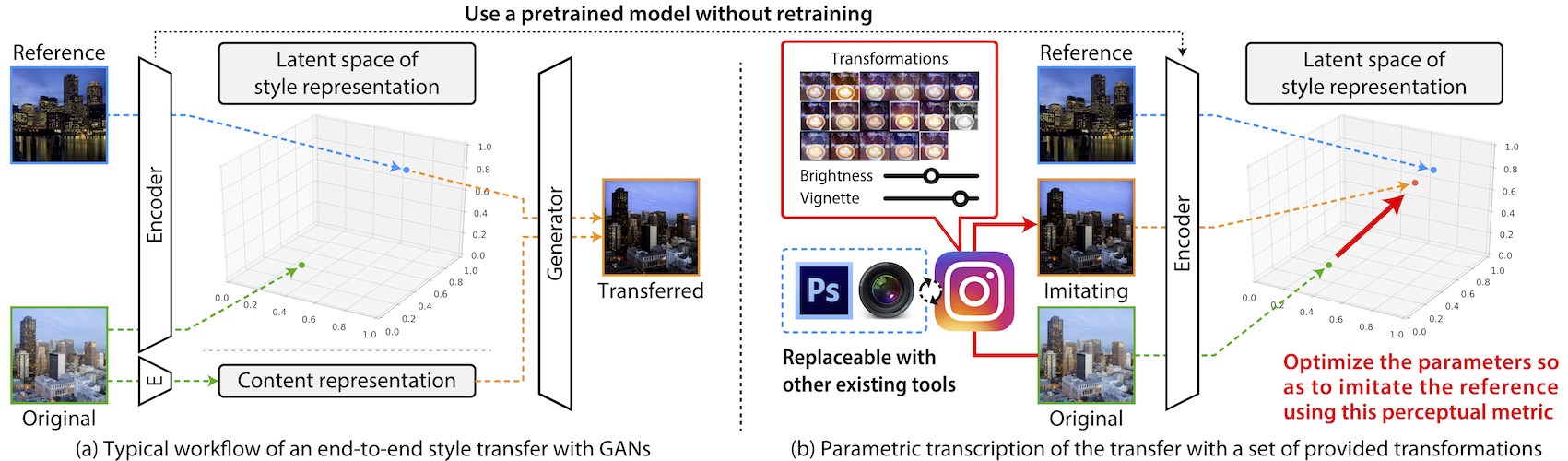

Current deep learning techniques for style transfer would not be optimal for design support since their "one-shot" transfer does not fit exploratory design processes. To overcome this gap, we propose parametric transcription, which transcribes an end-to-end style transfer effect into parameter values of specific transformations available in an existing content editing tool. With this approach, users can imitate the style of a reference sample in the tool that they are familiar with and thus can easily continue further exploration by manipulating the parameters. To enable this, we introduce a framework that utilizes an existing pretrained model for style transfer to calculate a perceptual style distance to the reference sample and uses black-box optimization to find the parameters that minimize this distance. Our experiments with various third-party tools, such as Instagram and Blender, show that our framework can effectively leverage deep learning techniques for computational design support.

Hiromu Yakura, Yuki Koyama, and Masataka Goto: Tool- and Domain-Agnostic Parameterization of Style Transfer Effects Leveraging Pretrained Perceptual Metrics.

In Proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI), 2021. [Paper] [arXiv:2105.09207]

This work was supported in part by JST ACT-X (JPMJAX200R) and CREST (JPMJCR20D4), Japan.